Hispar

A top list of web pages, not domains

Hispar is a top list of web pages. We go deeper than the Alexa Top 1 Million and give you URLs of internal pages instead of just the landing page. We compile Hispar from Google search results obtained through Google's paid search API, and provide it to the community.

Hispar is named after the Hispar-Biafo glaciers in the Karakoram mountains of Pakistan, the longest glacial system outside of polar regions. Hispar is a product of research at Duke University and MPI Informatics. Please don't forget to cite us!

Do you just measure the landing page?

When researchers measure characteristics of web sites or evaluate their web-page-delivery optimizations, they often test only the landing pages of those web sites. They do so because it is quick and easy: lists like Alexa and Tranco provide top web sites, and each web site has a single landing page. As a result, internal pages are largely ignored.

We reviewed five years (2015 to 2019) of research published at five of the top networking venues: ACM Internet Measurement Conference (IMC), Passive and Active Measurement Conference (PAM), USENIX Symposium on Networked Systems Design and Implementation (NSDI), ACM Special Interest Group on Data Communications (SIGCOMM), and ACM Conference on emerging Networking EXperiments and Technologies (CoNEXT). We used our lit_grabber tool to automatically fetch the PDF versions of papers published at these venues and search them for keywords related to popular top lists. Then, we manually reviewed the papers that used top lists and assessed whether their findings would need to be revised if they were to apply to internal pages. We found that about two-thirds of the papers that use a top list need some revision. Please see our paper for more details.
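The keyword-search step can be sketched in Python as follows. This is not the lit_grabber tool itself: it assumes the paper text has already been extracted from the PDF, and the keyword list is a hypothetical example of popular top-list names.

```python
import re

# Hypothetical keyword set: names of popular top lists.
# The keywords actually used by lit_grabber may differ.
TOP_LIST_KEYWORDS = ["Alexa", "Tranco", "Majestic", "Umbrella", "Quantcast"]


def mentioned_top_lists(paper_text: str) -> set[str]:
    """Return the top-list names that appear as whole words in paper_text."""
    found = set()
    for name in TOP_LIST_KEYWORDS:
        # \b word boundaries keep a name from matching inside a longer token.
        if re.search(rf"\b{re.escape(name)}\b", paper_text, flags=re.IGNORECASE):
            found.add(name)
    return found


def needs_manual_review(paper_text: str) -> bool:
    """Flag a paper for manual review if it mentions any top list."""
    return bool(mentioned_top_lists(paper_text))
```

A match only flags a paper: a word such as "Umbrella" can appear for unrelated reasons, which is one reason flagged papers still need a manual read.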

| Venue | Papers | Using a top list | Major revision | Minor revision | No revision |
|---|---|---|---|---|---|
| IMC | 214 | 56 | 9 | 23 | 24 |
| PAM | 117 | 27 | 7 | 10 | 10 |
| NSDI | 222 | 11 | 6 | 4 | 1 |
| SIGCOMM | 187 | 9 | 1 | 6 | 2 |
| CoNEXT | 180 | 16 | 7 | 5 | 4 |
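As a quick check of the two-thirds figure, the sketch below totals the table: papers needing a major or minor revision, divided by all papers that use a top list. It also verifies that the three revision columns add up to the "Using a top list" column for every venue.

```python
# Per-venue counts copied from the table above:
# (papers using a top list, major revision, minor revision, no revision)
TABLE = {
    "IMC": (56, 9, 23, 24),
    "PAM": (27, 7, 10, 10),
    "NSDI": (11, 6, 4, 1),
    "SIGCOMM": (9, 1, 6, 2),
    "CoNEXT": (16, 7, 5, 4),
}


def revision_share(table):
    """Return (papers using a list, papers needing revision, share revised)."""
    for venue, (n, major, minor, none) in table.items():
        # The three revision categories partition the papers using a list.
        assert major + minor + none == n, venue
    using = sum(row[0] for row in table.values())
    revised = sum(row[1] + row[2] for row in table.values())
    return using, revised, revised / using


using, revised, share = revision_share(TABLE)
print(f"{revised}/{using} papers need revision ({share:.1%})")
# prints: 78/119 papers need revision (65.5%)
```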

Using a version of the Hispar list, we compared the landing pages of 1000 top web sites to a set of internal pages from those web sites and found significant differences across various dimensions.

Is the landing page enough?

We picked 1000 web sites from Alexa Top 1 million, and compared the landing page of each web site to 19 internal pages. We measured these pages across various performance, security, and privacy characteristics, and found that internal pages differ significantly from landing pages!

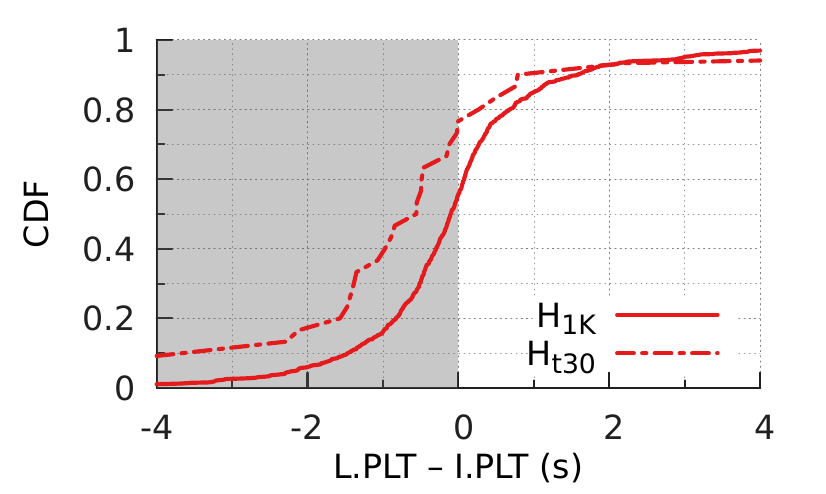

Fig. (a) plots the CDF of the difference between the page load time (PLT) of landing pages and that of the corresponding internal pages, for the top 1000 web sites (H1K) and the top 30 web sites (Ht30). The CDF shows that landing pages are faster than internal pages for 56% of the web sites in H1K and for 77% in Ht30, even though landing pages are bigger and have more objects than internal pages.
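The sketch below shows one way to turn per-page PLT measurements into the quantity in Fig. (a) and the share of sites whose landing page is faster. It assumes, hypothetically, that each site is summarized by the landing-page PLT minus the median PLT of its internal pages; the toy numbers are illustrative only and are not measurements from our study.

```python
from statistics import median


def plt_differences(sites):
    """sites maps a site name to (landing_plt, [internal_plts]) in seconds.

    Hypothetical summary: landing-page PLT minus the median internal-page PLT.
    A negative value means the landing page loaded faster.
    """
    return {
        site: landing - median(internal)
        for site, (landing, internal) in sites.items()
    }


def ecdf(values):
    """Sorted (x, F(x)) points of the empirical CDF of values."""
    xs = sorted(values)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


def fraction_landing_faster(diffs):
    """Share of sites whose PLT difference is negative."""
    values = list(diffs.values())
    return sum(d < 0 for d in values) / len(values)


# Toy numbers for illustration only, not data from the study.
toy = {
    "a.example": (1.2, [1.8, 2.0, 1.5]),
    "b.example": (3.0, [2.1, 2.4]),
    "c.example": (0.9, [1.1, 1.3, 1.0, 1.2]),
}
diffs = plt_differences(toy)
print(fraction_landing_faster(diffs))
```

Here two of the three toy sites have a faster landing page; plotting `ecdf(diffs.values())` gives a curve of the same kind as Fig. (a).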

Fig. (b) plots the CDF of bytes in different content types, HTML and CSS (HTM/CSS), images (IMG), and JavaScript (JS), as a fraction of total page size. It shows that, in the median, internal pages have 10% more JS bytes, 22% more HTM/CSS bytes, and 36% fewer image bytes than landing pages.
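A minimal sketch of computing these per-page fractions, assuming each page load was recorded as a HAR (HTTP Archive) file. Taking bytes from `response.content.size` and bucketing by MIME type with the simple rules below are assumptions made for illustration, not necessarily what our measurement pipeline does.

```python
def content_type_fractions(har: dict) -> dict:
    """Fraction of a page's bytes in HTM/CSS, IMG, JS, and other, from a HAR object.

    Assumptions (hypothetical): bytes come from response.content.size, and
    content types are bucketed by MIME type with the rules below.
    """
    totals = {"HTM/CSS": 0, "IMG": 0, "JS": 0, "other": 0}
    for entry in har["log"]["entries"]:
        content = entry["response"]["content"]
        size = max(content.get("size", 0), 0)  # guard against missing or negative sizes
        mime = content.get("mimeType", "").lower()
        if mime.startswith(("text/html", "text/css")):
            bucket = "HTM/CSS"
        elif mime.startswith("image/"):
            bucket = "IMG"
        elif "javascript" in mime or "ecmascript" in mime:
            bucket = "JS"
        else:
            bucket = "other"
        totals[bucket] += size
    page_bytes = sum(totals.values())
    if page_bytes == 0:
        return {bucket: 0.0 for bucket in totals}
    return {bucket: n / page_bytes for bucket, n in totals.items()}
```

Computing these fractions for every landing page and every internal page, then comparing the medians per content type, yields the kind of comparison shown in Fig. (b).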

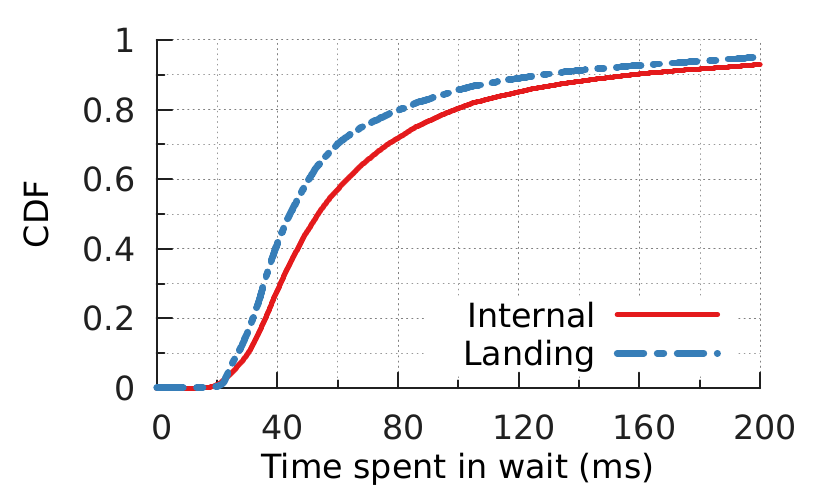

A web request spends time in several stages: connect, wait, response, and so on. The wait stage is the time between sending the last byte of the request and receiving the first byte of the response. Fig. (c) plots the CDF of wait times for each request on internal pages vs. landing pages. It shows that requests on internal pages spend 20% more time in the wait stage, perhaps indicating more cache misses at the CDN.
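HAR files record these stages per request in a `timings` object, where `wait` is the time spent waiting for a response from the server. Assuming page loads are stored as HAR files (an assumption of this sketch, with hypothetical function names), per-request wait times and the ratio of median wait times could be extracted like this:

```python
import json
from statistics import median


def load_har(path: str) -> dict:
    """Read one HAR file (JSON) from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def wait_times(har: dict) -> list[float]:
    """Per-request wait times in milliseconds (HAR timings.wait).

    Entries with a missing or negative wait value are skipped defensively.
    """
    return [
        entry["timings"]["wait"]
        for entry in har["log"]["entries"]
        if entry["timings"].get("wait", -1) >= 0
    ]


def median_wait_ratio(internal_hars, landing_hars) -> float:
    """Median wait of internal-page requests divided by that of landing-page requests."""
    internal = [w for har in internal_hars for w in wait_times(har)]
    landing = [w for har in landing_hars for w in wait_times(har)]
    return median(internal) / median(landing)
```

A ratio of 1.2 would correspond to internal-page requests waiting 20% longer in the median.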

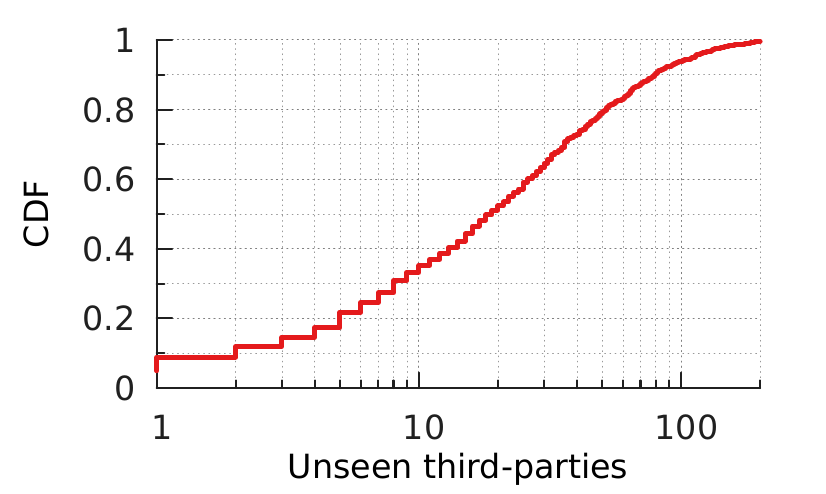

While loading a page from one domain (e.g., nytimes.com), the browser may fetch resources from third-party domains (e.g., facebook.net). We found that internal pages often load resources from third parties that never appear on the landing page. Fig. (d) plots the CDF of the number of third parties observed on internal pages but not on the landing page of the same web site.
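Counting such third parties is a set difference over request hostnames. The sketch below uses a deliberately simplified, hypothetical rule for what counts as third-party: any host that is neither the site's domain nor one of its subdomains. A faithful analysis would compare registrable domains (eTLD+1) using the Public Suffix List, and might also group domains owned by the same organization.

```python
from urllib.parse import urlsplit


def hostname(url: str) -> str:
    """Lower-cased hostname of a URL, or "" if it has none."""
    return (urlsplit(url).hostname or "").lower()


def is_third_party(url: str, site: str) -> bool:
    """Simplified (hypothetical) rule: not the site's domain or a subdomain of it."""
    host = hostname(url)
    return not (host == site or host.endswith("." + site))


def third_parties(request_urls, site):
    """Set of third-party hostnames contacted while loading one page."""
    return {hostname(u) for u in request_urls if is_third_party(u, site)}


def new_third_parties(landing_requests, internal_pages_requests, site):
    """Third-party hosts seen on some internal page but never on the landing page."""
    on_landing = third_parties(landing_requests, site)
    on_internal = set()
    for requests in internal_pages_requests:
        on_internal |= third_parties(requests, site)
    return on_internal - on_landing
```

For one web site, `len(new_third_parties(...))` would give one sample of the distribution plotted in Fig. (d).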


In the paper, we compare landing pages and internal pages over nine different dimensions, and for each, we discuss the impact on past research.

Data set

We release our data set and all tools necessary for reproducing our results here.

Building Hispar

To build the Hispar list of internal pages, we use search results from the Google Custom Search API. Although the exact methodology of search engines for crawling and ranking results is not clear, the general approach is well known. Search engines crawl web sites almost exhaustively (barring pages excluded in robots.txt or those behind a login), implement some form of a quality metric (e.g. Google PageRank assesses quality by observing links posted on other high-quality web sites), and take user inputs into account (what people search for and click on).
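To illustrate the kind of link-based quality metric mentioned above, here is the textbook PageRank computation by power iteration. It is the classic published formulation with the commonly used damping factor of 0.85, not Google's production ranking, which is not public.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank by power iteration.

    links maps each page to the list of pages it links to. A page with no
    outgoing links spreads its rank uniformly over all pages. Ranks sum to 1.
    """
    pages = set(links) | {p for targets in links.values() for p in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page receives the "random jump" share (1 - damping) / n.
        new = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's damped rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                # Dangling page: distribute its damped rank over all pages.
                for t in pages:
                    new[t] += damping * rank[page] / n
        rank = new
    return rank
```

With `links = {"a": ["c"], "b": ["c"], "c": ["a"]}`, page `c`, which two pages link to, ends up with the highest rank, and `b`, which nothing links to, the lowest.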

We bootstrap the Hispar list with the Alexa Top 1 million. Going through its web sites in order of popularity, for each web site ω we query the Google API for "site:ω" and pick the first 50 unique results. We set the location to the U.S. and restrict the results to English-language pages. The final list has 100,000 pages from approximately 2100 web sites, since some web sites yield fewer than 50 unique results.
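The collection step could look roughly like the sketch below, which calls the Google Custom Search JSON API (`customsearch/v1`) with its `q`, `gl`, `lr`, `num`, and `start` parameters. The API key and search-engine ID (`cx`) are placeholders you must supply, the function names are hypothetical, and this is not our production crawler. The API returns at most 10 results per request, so 50 unique results take several paged requests.

```python
import json
import urllib.parse
import urllib.request

API_URL = "https://www.googleapis.com/customsearch/v1"


def fetch_json(params: dict) -> dict:
    """Issue one Custom Search JSON API request and decode the response."""
    url = API_URL + "?" + urllib.parse.urlencode(params)
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def site_urls(domain, api_key, cx, per_site=50, fetch=fetch_json):
    """First `per_site` unique result URLs for the query "site:<domain>"."""
    urls, seen = [], set()  # list keeps search order; set de-duplicates
    start = 1
    # The API serves at most the first 100 results, so start can be at most 91.
    while len(urls) < per_site and start <= 91:
        data = fetch({
            "key": api_key, "cx": cx, "q": f"site:{domain}",
            "gl": "us",       # geolocate the query in the U.S.
            "lr": "lang_en",  # restrict to English-language documents
            "num": 10, "start": start,
        })
        items = data.get("items", [])
        if not items:
            break  # no more results for this web site
        for item in items:
            link = item["link"]
            if link not in seen:
                seen.add(link)
                urls.append(link)
                if len(urls) == per_site:
                    break
        start += 10
    return urls


def build_list(ranked_domains, api_key, cx, target=100_000, fetch=fetch_json):
    """Visit domains from most to least popular until `target` URLs are collected."""
    pages = []
    for domain in ranked_domains:
        for url in site_urls(domain, api_key, cx, fetch=fetch):
            pages.append((domain, url))
            if len(pages) == target:
                return pages
    return pages
```

Passing `fetch` as a parameter keeps the network call separate from the paging and de-duplication logic, so that logic can be exercised with a stub before spending API quota.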

We update the Hispar list weekly. The latest list can be downloaded here, whereas an archive is maintained here.

The list has the following format:

`<alexa_rank> <search_rank> <url>`

`search_rank` is 0 when `url` is the landing page of the web site. Although we include `search_rank` in the list, we recommend against inferring any ordering of URLs for the same web site.
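A minimal parser for this format might look as follows. It assumes one whitespace-separated entry per line; the function name is hypothetical, and the sample lines in any test use placeholder domains rather than real list entries. Internal pages are stored in a set, which follows the recommendation not to treat `search_rank` as an ordering.

```python
from collections import defaultdict


def parse_hispar(lines):
    """Group Hispar entries by Alexa rank.

    Each line is assumed to be: <alexa_rank> <search_rank> <url>
    Returns {alexa_rank: {"landing": url or None, "internal": set of urls}}.
    """
    sites = defaultdict(lambda: {"landing": None, "internal": set()})
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        alexa_rank, search_rank, url = line.split(maxsplit=2)
        entry = sites[int(alexa_rank)]
        if int(search_rank) == 0:
            entry["landing"] = url  # search_rank 0 marks the landing page
        else:
            entry["internal"].add(url)  # a set: no ordering implied
    return dict(sites)
```

Because the function accepts any iterable of lines, an open file object (for example, `with open(path) as f: sites = parse_hispar(f)`) can be passed directly.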

Contact

If you use the provided or a customized version of Hispar, please consider citing us:

```bibtex
@InProceedings{Aqeel-IMC-2020,
  author    = "Aqeel, Waqar and Chandrasekaran, Balakrishnan and Maggs, Bruce and Feldmann, Anja",
  booktitle = "Internet Measurement Conference",
  publisher = "ACM",
  title     = "On Landing and Internal Pages: The Strange Case of Jekyll and Hyde in Internet Measurement",
  year      = "2020",
}
```

Please feel free to contact us (waqeel [at] cs [dot] duke [dot] edu) for any questions or feedback.